Test Target

Run a load test to verify whether the vendor network can handle 40,000 concurrent requests.

Test Plan

Several issues need to be clarified before testing, so communicate early with the other teams and your manager. The issues include:

How much network bandwidth is available for testing? (IT department, Operation team)

How many VMs are needed to meet the testing requirement? (IT department, Operation team)

How should the VMs be assigned to the different sites? (IT department, Operation team)

…

Test Environment

VMs - 80 VMs in total: 4 in Hefei (HF), 4 in Hangzhou (HZ), and 72 in the US

HF site: 4 VMs

CPU - Intel(R) Xeon(R) CPU E5520 @ 2.27GHz 2.26GHz

MEM - 8 GB

Disk - 50 GB

OS - Win7 Enterprise

HZ site: 4 VMs

CPU - Intel(R) Xeon(R) CPU E7-2870 @ 2.4GHz 2.4GHz

MEM - 8 GB

Disk - 150 GB

OS - Win7 Enterprise

US site: 72 VMs

CPU - Intel(R) Xeon(R) CPU E7-2870 @ 2.4GHz 2.4GHz

MEM - 8 GB

Disk - 80 GB

OS - Win7 Enterprise

Testing tool - JMeter v3.0 or the latest version

We use JMeter for distributed testing. Before starting, there are a few things to check:

- Make sure the firewalls on the systems are turned off.

- Make sure the clients (slaves) are on the same subnet.

- Make sure the server (master) is on the same subnet as the clients.

- Make sure JMeter can access the server.

- Make sure the same version of JMeter is used on all the systems. Mixing versions may not work correctly.
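
The reachability part of this checklist can be checked from the master before a run. The following is a minimal sketch, assuming jmeter-server listens on its default RMI registry port 1099; the function names are hypothetical. A successful connection only shows that the registry port is open, so it does not replace the firewall and subnet checks above.

```python
import socket


def can_connect(host, port=1099, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable host names.
        return False


def unreachable_slaves(hosts, port=1099, timeout=3.0):
    """Return the hosts that did not accept a TCP connection on the given port."""
    return [h for h in hosts if not can_connect(h, port, timeout)]
```

Calling `unreachable_slaves` with the list of slave IPs returns the ones that need attention before the test starts; an empty list means every slave accepted a connection.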

Master – the system running JMeter in non-GUI mode, which controls the test

Slave – the system running jmeter-server, which takes commands from the master and sends requests to the target system(s)

Target – the web server we plan to stress test

Test Steps

Make sure JDK 1.8 is installed on all VMs and machines

Unzip the JMeter package to any directory

Edit the JMeter configuration file (

- Add each slave's IP address to “remote_hosts” in the configuration; we suggest removing 127.0.0.1 and localhost so the master does not also act as a slave

- Increase the value of “jmeter.exit.check.pause” (default 2000 ms), which is how long JMeter waits before reporting that the JVM has failed to exit

On the slave systems, go to the jmeter/bin directory and run “jmeter-server.bat”

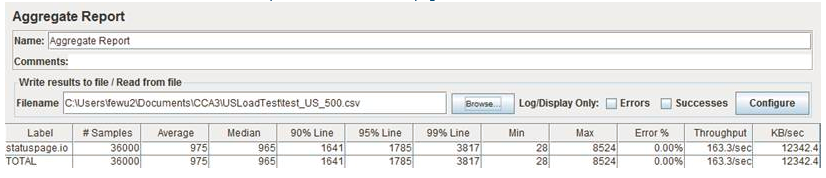

On the master system, run the following command to start the test in JMeter non-GUI mode:

jmeter -n -r -t testplan.jmx -l report.csv

To view the aggregate report, add an Aggregate Report listener and load report.csv into it. Try again if the report is displayed abnormally.
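
With 80 slaves, typing the “remote_hosts” value by hand is error-prone. The sketch below shows one way to build it from a list of IPs and to assemble the master command from the steps above. The IP addresses and function names are hypothetical placeholders; only the property name and the command-line flags come from the steps.

```python
def remote_hosts_value(slave_ips):
    """Join slave IPs into a remote_hosts value, skipping loopback and duplicates."""
    loopback = {"127.0.0.1", "localhost"}
    kept = []
    for ip in slave_ips:
        ip = ip.strip()
        if ip and ip not in loopback and ip not in kept:
            kept.append(ip)
    return ",".join(kept)


def master_command(test_plan, result_file):
    """Build the non-GUI (-n), remote-start (-r) command run on the master."""
    return ["jmeter", "-n", "-r", "-t", test_plan, "-l", result_file]


# Hypothetical slave addresses; replace with the real VM IPs.
ips = ["127.0.0.1", "192.0.2.11", "192.0.2.12", "192.0.2.11"]
print("remote_hosts=" + remote_hosts_value(ips))
print(" ".join(master_command("testplan.jmx", "report.csv")))
```

The first printed line can be pasted into the configuration file; the second matches the command used on the master.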

Test Results

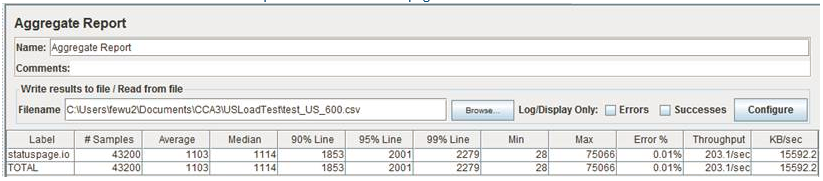

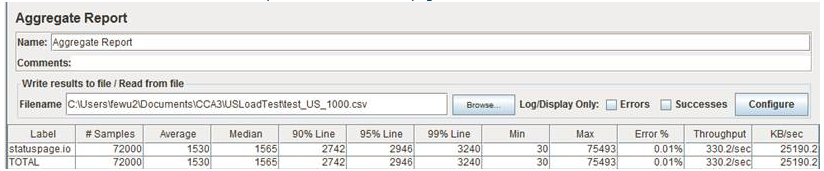

Indicator definitions:

Samples - the number of HTTP requests run with the same label.

Average - the average response time of a set of results.

Median - the time in the middle of a set of results. 50% of the samples took no more than this time; the remainder took at least as long.

90% Line - 90% of the samples took no more than this time. The remaining samples took at least as long as this.

95% Line - 95% of the samples took no more than this time. The remaining samples took at least as long as this.

99% Line - 99% of the samples took no more than this time. The remaining samples took at least as long as this.

Min - The shortest time for the samples with the same label.

Max - The longest time for the samples with the same label.

Error % - Percent of requests with errors.

Throughput - The number of requests per second sent to the target server during the test.

KB/sec - The throughput measured in Kilobytes per second.
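
To make these definitions concrete, the following sketch recomputes the indicators from a results file. It assumes the CSV has a header row with the columns `timeStamp`, `elapsed`, `label`, `success` and `bytes`, and it uses a nearest-rank percentile, which may differ slightly from the values JMeter's own Aggregate Report shows. The sample rows are made up for illustration and are not results from this test.

```python
import csv
import io
import math


def percentile(sorted_times, pct):
    """Nearest-rank percentile: the smallest time that pct% of samples do not exceed."""
    rank = max(1, math.ceil(pct * len(sorted_times) / 100))
    return sorted_times[rank - 1]


def summarize(csv_text):
    """Group JMeter CSV rows by label and compute the aggregate indicators."""
    by_label = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        by_label.setdefault(row["label"], []).append(row)

    report = {}
    for label, rows in by_label.items():
        times = sorted(int(r["elapsed"]) for r in rows)
        start_ms = min(int(r["timeStamp"]) for r in rows)
        end_ms = max(int(r["timeStamp"]) + int(r["elapsed"]) for r in rows)
        seconds = max((end_ms - start_ms) / 1000, 0.001)  # avoid division by zero
        errors = sum(1 for r in rows if r["success"].strip().lower() != "true")
        total_bytes = sum(int(r["bytes"]) for r in rows)
        report[label] = {
            "samples": len(times),
            "average": sum(times) / len(times),
            "median": percentile(times, 50),
            "p90": percentile(times, 90),
            "p95": percentile(times, 95),
            "p99": percentile(times, 99),
            "min": times[0],
            "max": times[-1],
            "error_pct": 100 * errors / len(times),
            "throughput": len(times) / seconds,        # requests per second
            "kb_per_sec": total_bytes / 1024 / seconds,
        }
    return report


# Made-up sample rows, for illustration only.
SAMPLE = """timeStamp,elapsed,label,success,bytes
1000,100,Home,true,1024
1100,200,Home,true,1024
1200,300,Home,false,1024
1300,400,Home,true,1024
"""

for label, stats in summarize(SAMPLE).items():
    print(label, stats)
```

For the real run, read report.csv into a string and pass it to `summarize`.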

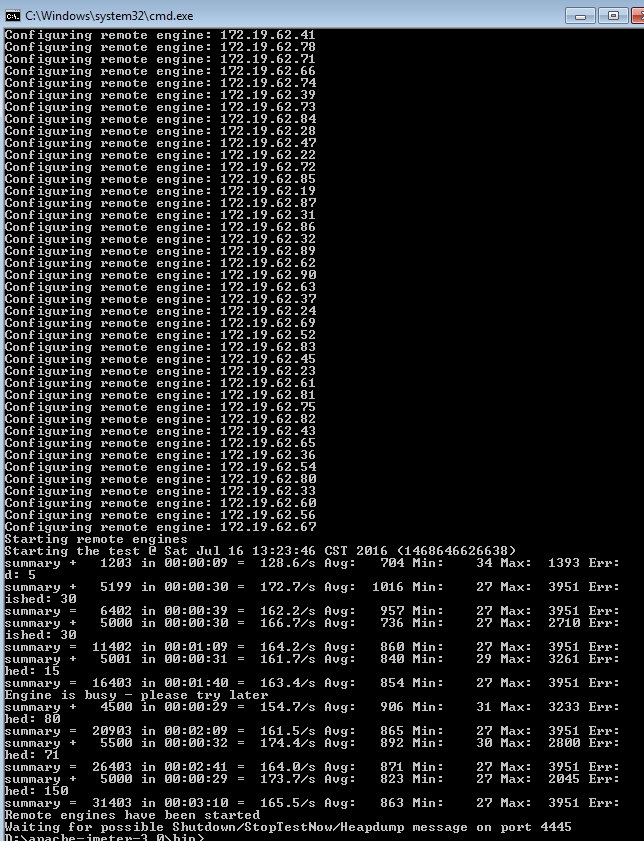

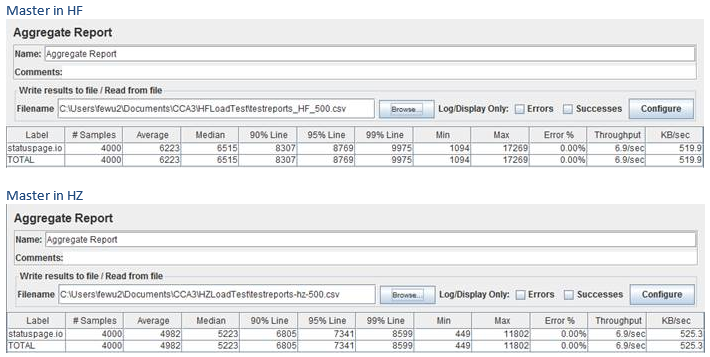

Load test with 8 VMs: 4 slaves in HF, 4 slaves in HZ

1.1 Each VM sends 500 concurrent requests

1.2 Each VM sends 1000 concurrent requests

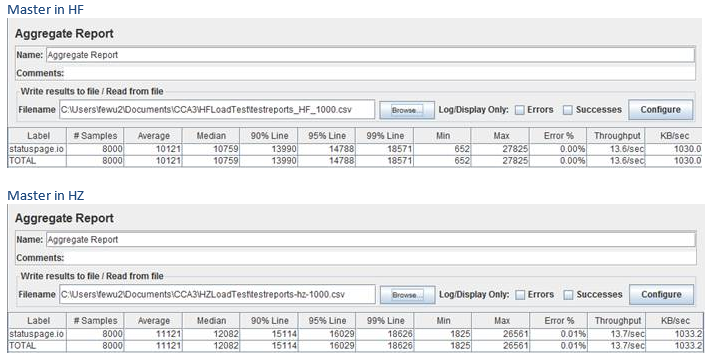

Load test with 72 VMs in US

2.1 Each VM sends 500 concurrent requests

2.2 Each VM sends 600 concurrent requests

2.3 Each VM sends 1000 concurrent requests
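
As a quick check of how each scenario compares with the 40,000-request target, the totals can be computed as below. This is a sketch that assumes each scenario runs on its own and that every VM reaches the stated per-VM concurrency.

```python
TARGET = 40_000  # concurrent requests named in the test target

# scenario id -> (number of VMs, concurrent requests per VM)
scenarios = {
    "1.1": (8, 500),
    "1.2": (8, 1000),
    "2.1": (72, 500),
    "2.2": (72, 600),
    "2.3": (72, 1000),
}


def total_concurrency(vms, per_vm):
    """Total concurrent requests generated by all VMs in one scenario."""
    return vms * per_vm


for name, (vms, per_vm) in scenarios.items():
    total = total_concurrency(vms, per_vm)
    status = "meets" if total >= TARGET else "below"
    print(f"{name}: {vms} x {per_vm} = {total} ({status} target)")
```

Under these assumptions, scenarios 2.2 (43,200) and 2.3 (72,000) reach the target on their own, while 1.1, 1.2 and 2.1 stay below it.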

Notes

Estimating the bandwidth (BW) available for testing requires taking several factors into account, to avoid unnecessary trouble.

Currently, the total network bandwidth is XXX Mbps at the HF site; the HZ site has the same.

On the advice of the IT team, about YYY Mbps can be used for this testing.

A test on my machine showed that 500 concurrent requests generate 3 MB of traffic per second (24 Mbps), which is why we use only 4 VMs each in HF and HZ.
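
The bandwidth reasoning can be written out as a small calculation. This sketch takes the measured 3 MB/s per 500 concurrent requests from the note above and assumes traffic scales linearly with concurrency, which has not been verified. The 100 Mbps budget in the example is a hypothetical placeholder, not the actual YYY value.

```python
def mbps_from_mb_per_s(mb_per_s):
    """Convert megabytes per second to megabits per second."""
    return mb_per_s * 8


def site_traffic_mbps(vms, requests_per_vm, mb_per_s_per_500=3.0):
    """Estimated site traffic in Mbps, assuming linear scaling with concurrency."""
    per_vm = mbps_from_mb_per_s(mb_per_s_per_500) * requests_per_vm / 500
    return vms * per_vm


def max_vms(budget_mbps, requests_per_vm, mb_per_s_per_500=3.0):
    """Largest number of VMs whose estimated traffic fits within the budget."""
    per_vm = mbps_from_mb_per_s(mb_per_s_per_500) * requests_per_vm / 500
    return int(budget_mbps // per_vm)


print(mbps_from_mb_per_s(3.0))       # 24.0 Mbps per VM at 500 requests
print(site_traffic_mbps(4, 500))     # 4 VMs at 500 requests each
print(site_traffic_mbps(4, 1000))    # 4 VMs at 1000 requests each
print(max_vms(100, 500))             # hypothetical 100 Mbps budget
```

Replacing the hypothetical budget with the figure agreed with IT gives the number of VMs a site can host for a given per-VM concurrency.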